This report was published for the 1st time on StorageNewsletter February 24th.

Datameer, a structured data management leader, joined this edition to cover and refresh 2 essential components of its cloud strategy. The company addresses the ongoing problem of complex and fragmented data silos, locations and formats, with a clear goal of aggregating them to facilitate search and access.

The first idea, implemented by Spectrum, is to connect to multiple sources, then move and transform data to build a cloud data warehouse. The second, provided by Spotlight, is unified virtualized access following a discovery, indexing and cataloging phase.

The 2 products can be used independently. Spectrum makes the connection between a wide variety of sources with more than 200 connectors and establishes a pipe from them to a cloud data warehouse. This pipeline can be monitored, secured and scheduled to enable the transformations that feed this cloud data repository. With all this data collected and transformed, Spotlight uses discovery and search techniques to catalog structure, metadata and models, and enables exploration via unified access.

Both products are very visual and quick to learn, and users can rapidly start to connect, import and access data to discover new information. They boost the work of data scientists and could even replace them at some companies, as they were more associated with Hadoop, a story of the past that helped develop and grow this ecosystem. This time to data visualization is crucial, and Spotlight makes it feasible with a capability to collaborate and share models between teams.

Fujifilm covered 2 main topics: the 580TB tape, of course, and Object Archive (OA).

Fujifilm, one of the main tape manufacturers, refreshed our memory on the 580TB capacity, a joint effort with IBM that can store 48 times the capacity of LTO-8. It is the result of a new record in areal density, reaching 317Gb per square inch, powered by a new magnetic particle named Strontium Ferrite. It invites us to dream about hyper-dense exascale tape libraries very soon. Tape continues to be the media chosen by hyperscalers for cold data and long-term preservation with a compelling TCO. In Europe, OVHcloud, IBM and Atempo have announced a partnership to promote cold cloud storage with IBM 3592 20TB tape.

Also, in this period of violent ransomware attacks, tape represents a safe storage model with its air gap approach. The second key element to consider is the passive nature of the media and the energy savings it brings, especially under the climate change pressure we all live with.

Fujifilm unveiled OA version 2, which is also named Software-Defined Tape outside of the US. OA is an on-premises object storage product exposing S3 and connected to tape libraries, addressing long-term archiving needs by leveraging tape as a passive media. The main idea is to offer enterprises a method to archive data over the long term with tape media in the back-end.

The company understands that market adoption of OA needs partnerships, and the OA team has selected Cloudian, Caringo and NetApp StorageGRID, to name the main ones. These players are listed in the Coldago Map 2020 for Object Storage.

The S3 storage exposed via OA also needs to be integrated and compatible with horizontal data management products, and we expect some validation with 2 partners of Fujifilm, StrongBox Data and QStar, but also with Hammerspace, Komprise, Point Software and Systems, Data Dynamics or Versity.

HYCU, a leader in SaaS backup, made great progress in 2020, now covering more than 70 countries with more than 320 partners and more than 2000 customers. The progress is spectacular and HYCU's NPS now exceeds 91, which is not so common and confirms users' confidence in HYCU, its products and its team.

The company again chose the tour to unveil its new product offering, HYCU Protégé for O365. Recognized and largely adopted for its backup for Nutanix, the company has jumped rapidly onto the SaaS wave and quickly built a track record in multi-cloud, supporting on-premises and in-the-cloud environments while being agentless, application-aware and of course multi-tenant.

This new extension to Protégé protects the full suite of Office 365, i.e. Outlook, OneDrive, Teams, SharePoint and OneNote, and Office 365 itself with classic Excel, Word... This is one of the differences with the competition. Protégé leverages the journal feature of the application engine to provide a near-CDP capability coupled with a high level of granularity. Advanced search is also provided to navigate within protected data fields. Data is encrypted in transit and at rest with industry-standard methods, and the target is also aligned with compliance regulations.

The HYCU team iterates again on SAP HANA DR for GCP with new capabilities around scheduling, snapshot and recovery. HYCU is really accelerating and should introduce new products soon, as demand is hot for modern data protection for SaaS applications and container-based environments.

Komprise, a key player in data management, confirmed its momentum in 2020 with solid growth in multiple areas - customers, volume of data under management and partners - and also new leaders onboard. In a special business climate with obvious pressure due to Covid-19, users wish to optimize their infrastructure and delay hardware purchases, and Komprise has a real answer to optimize cost by reducing the presence of inactive data on primary storage.

The company has clearly modernized tiering for unstructured data, covering NAS and S3 storage on-premises or in-the-cloud. The technology it develops avoids the classic stub approach and relies on an intelligent symbolic link mechanism across storage file or object entities. But the product delivers more than a comprehensive tool, as it offers deep analytics on the file and object environments fueled by Elasticsearch. Users understand their data landscape and what is going on with their storage consumption and usage.

With a clear desire to accelerate in Europe after a first presence there for a few years, the company has signed with Tech Data with a focus on UK, DACH and Nordics. It is a bit bizarre to ignore the south of Europe and especially France, as Komprise will let competitors such as Data Dynamics, StrongBox Data, Hammerspace or Point Software and Systems expand their footprint...

And the last piece of news: the Komprise replication engine was chosen by Pure Storage for FlashArray file services, which complements the partnership already in place around NAS migration.

Nasuni, a reference in global file services, had a very strong 2020 fueled by a new financial round of $40 million, for a total of $169 million, that invited the company to join the storage unicorn club. As of today the firm manages more than 125PB and almost 27 billion files globally across its growing installed base. It translates into an ARR of $100 million, a key metric for a SaaS business.

The team has extended its platform capabilities, replacing several classic NAS solutions as the right platform for file-based use cases. It confirms what we have said for several years: people start with one use case and, over time and with growing trust in the solution, they move more and more applications to it, consolidating use cases and above all reaffirming the role of the product as a platform. These use cases span primary and secondary storage.

The company also appeared in the Coldago Map 2020 for File Storage as a real challenger against other famous leaders. This is the result of regular growth and a multicloud strategy that delivers good results today.

The latest announcement of a strong partnership with Google Cloud illustrates this momentum. Google has recognized its deep limitations in the domain, having tried first with Filestore then Elastifile, but needs to rapidly fill the gap with its direct competition and adopt an established solution. Google will be very active co-promoting and selling Nasuni. The company insists on its model - file services on top of public cloud object storage - with key TCO numbers, adopted by large corporations that are distributed by nature. We'll see, as Nasuni prepares some new iterations soon.

Pavilion Data, an emerging leader in unified storage, had a remarkable 2020 marked by new product extensions and capabilities, new customer successes with significant progress on its installed base, and newcomers in the leadership team.

The adoption of its ultra-dense 4U storage system confirms that the network dimension of this machine creates a huge differentiator against the competition. HyperOS 3.0 clearly moves market positions and illustrates the market dynamic towards the U3 - Unified, Universal and Ubiquitous - storage concept we introduced a few years ago. The team insists on the notion of the platform being a central, critical element of any enterprise that relies on IT.

At several levels, the platform demonstrates strong DNA in terms of design, features, operations and results. The company sees adoption in block and file, object being more recent with the MinIO engine embedded. HyperOS implements a container-based layer to plug in file and object services. The major feature of this release is the global namespace (GNS) and multi-chassis capability across homogeneous access methods. For instance, a GNS can be based on 3 chassis for NFS while 2 of these 3 also offer an S3 GNS, which means the array can scale internally and externally.

The team also shared the results of its collaboration with Nvidia around GPUDirect Storage, which show interesting performance numbers in both file and block modes.

Qumulo, a leader in scale-out NAS, shared some interesting news about its 2020, reaching a new level with 200 billion files under management and more than 1EB of capacity licensed. The company has also reached unicorn status and confirms its leadership position in the Coldago Map 2020 for File Storage.

One surprising moment was when the team mixed disk file systems and distributed ones, comparing WAFL or ZFS with OneFS or the Qumulo internal file system. It was a slide we saw in 2017 during a previous tour...

Beyond that, the company has clearly made a marketing effort to rename its product line and associated data services around Cloud Q and Server Q. Among recent features we noticed instant upgrades, a GUI for Shift, AWS Outposts support, a machine learning-based NVMe cache and full encryption with AES-256.

Qumulo continues its effort with AWS, introducing several new configurations available on the marketplace and making things simpler for users who wish to deploy and start a Qumulo instance for a limited period of time or longer.

The company has clearly understood that its growing market adoption will continue with key global and regional partners, and we expect some new announcements in the coming months about new OEM and enterprise resellers.

StrongBox Data, an alternative unstructured data management player, sees its adoption progressing well, signing impressive deals in terms of capacity and complexity. As a reminder, StrongLink is data management software providing a cross-platform global namespace dedicated to unstructured data, leveraging intelligent data policies wherever data resides: on-premises or cloud-based, on disk or tape systems. For large configurations, it seems obvious to organize data across different existing and new tiers.

The team shared recent case studies deployed at NASA, DKRZ, Library of Congress and a German company starting with a B.

At NASA, the objectives were to provide a central catalogue of all digital assets, give global access to users from hundreds of projects across the nation with the capability to tag and move files across tiers, offer API access to connect Elasticsearch and finally report usage for different departments.

For DKRZ - German Climate Computing Center - the first challenge was to replace HPSS (9th largest site on the planet) and introduce LTFS for 150PB of data, and the second associated wish was to manage 120PB per year in HPC workflows. For this deployment, StrongLink is deployed as a 10+ scale-out engine, introduces 1PB of cache on fast NVMe-based storage and also connects a remote site for DR.

At the B. site, unstructured data grows exponentially and today reaches 2PB of new data every day, so the goal is to transparently migrate a minimum of 2PB of data per day to sustain the current configuration. This impressive configuration is connected to a Spectra Logic TFinity with a near-term objective of 1EB.

VAST Data updated us on the business and revealed an extraordinary trajectory for a storage company. Already identified as a blitzscaler by Coldago Research, being also a storage unicorn, the team has confirmed $150 million of bookings after just a few quarters of operations.

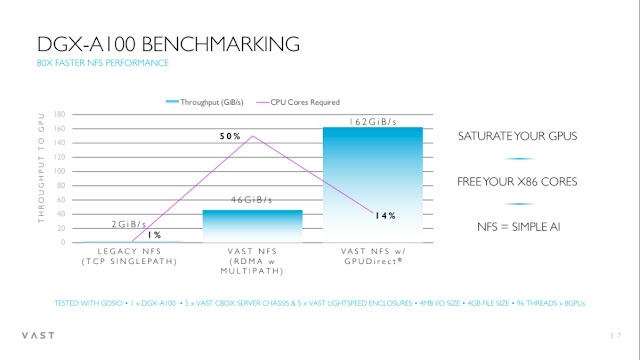

The company has continued to make great progress on the product - Universal Storage - that beats records in various domains. The product has proven that fast NFS is possible, is listed in the IO500 ranking, and shows impressive numbers with Nvidia GPUDirect Storage. At scale, users didn't have many alternatives before VAST Data, as they all converged towards the parallel file system model, especially for scientific and technical needs. The beauty of this approach resides in the support of industry-standard file sharing protocols, NFS and SMB, making things completely transparent for the client machines and applications.

The second key message from the team was around one global all-flash storage platform spanning primary and secondary storage needs, supported by a $/TB ratio better than HDD thanks to unique data reduction techniques and erasure coding algorithms. The disaggregated shared-everything architecture built around QLC flash, Optane, NVMe-oF and a cacheless model helps the firm reach a new level not seen before in scalable NAS.

Started as a vertical solution validated for a few use cases, VAST Data has added more use cases to become a horizontal solution now covering plenty of them. For AI, a highly read-intensive environment, VAST Data released Lightspeed with compelling attributes such as NFS over RDMA.
